Zach Seward, editorial director of AI Initiatives at The New York Times, discussed the perils, but mostly the promises, of AI for journalists, in a talk at NYU’s Arthur L. Carter Journalism Institute on April 8.

In a lunchtime event hosted by NYU’s Ethics and Journalism Initiative, Seward shared, in his words, how news organizations are “using AI in both supremely dumb and incredibly smart ways — and lessons we can draw from both types of examples that illuminate the most ethical and effective applications for journalism.”

At The New York Times, Seward leads a team responsible for new forms of journalism made possible by artificial intelligence. Previously, he was a co-founder of the business news organization Quartz, where he held various leadership roles, including chief product officer, editor in chief, and CEO, over more than a decade. He has also worked at The Wall Street Journal and Harvard’s Nieman Journalism Lab.

Beginning with some appalling examples of AI gone wrong, Seward summarized what we can take away from these missteps: “There are some common qualities across these bad examples: the copy is unchecked; the approach, as lazy as possible; the motivation, entirely selfish; the presentation, dishonest and/or opaque.”

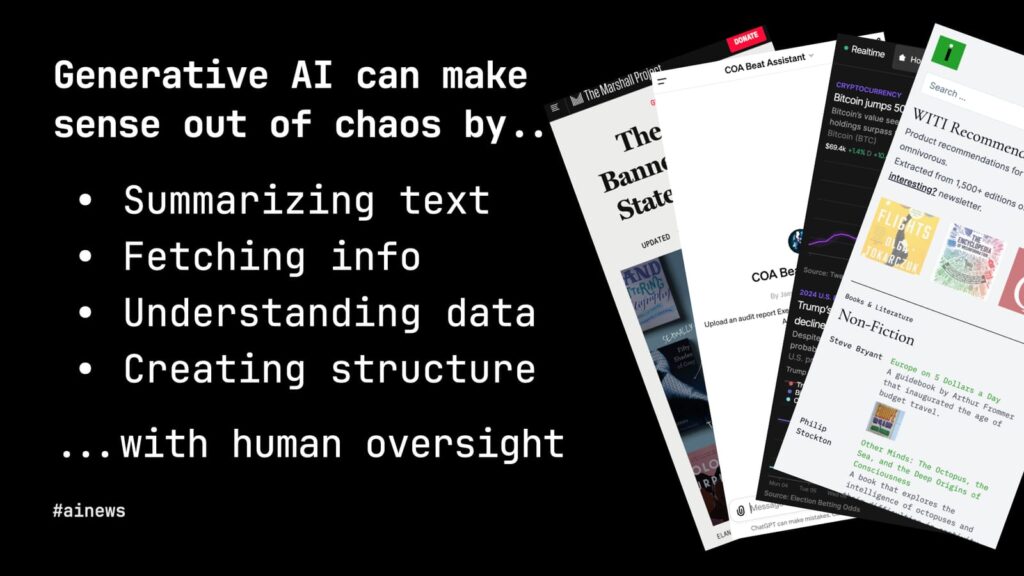

Then he offered some great examples of how news organizations are using “traditional” machine-learning models and generative AI – not for doing our writing or thinking but for summarizing text, fetching information, understanding data, and creating structure.

In a lively Q&A, Seward noted that, so far, he sees ethical issues surrounding AI closely tracking broader ethical issues in journalism: the need to be transparent about one’s sources and how one knows what one knows; the need to verify facts independently, not just trust any given source (in this case, AI); and the imperative to bring careful, human judgment to complex news tasks, such as interpreting the meaning of a Supreme Court decision that has just been handed down.

His talk and slides are available at zachseward.com.